C4 - 05: RESIDENT EVALUATION OF THIRD-YEAR MEDICAL STUDENTS CLOSELY RESEMBLES PERFORMANCE ON STANDARDIZED EXAM: AN ASSESSMENT OF PERFORMANCE DURING THE SURGERY CLERKSHIP

Alessandra Landmann, MD, Jason S Lees, MD, Tabitha Garwe, PhD, Jennifer D Clark, Zoona Sarwar, Arpit Patel, MD; University of Oklahoma Health Sciences Center

Introduction: Assessing medical student performance during the surgical clerkship is challenging because grading relies on subjective evaluations rather than objective measures of medical knowledge. We analyzed student evaluations to determine how they correlate with performance on a standardized examination. We hypothesized that subjective assessments of students differ between residents and attending physicians.

Methods: We conducted a retrospective review of medical student evaluations from the eight-week general surgery clerkship. Data from the individual student evaluations by residents and attending physicians were analyzed. At the conclusion of the rotation, the students were assessed with a standardized examination created by the National Board of Medical Examiners (i.e., the shelf exam), which we used as the benchmark objective evaluation. The students were also placed into groups based on the chronological order of their rotation during the academic year.

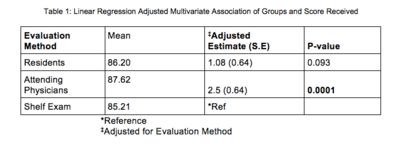

Results: Over the study period, from July 2016 to June 2017, 127 third-year medical students rotated through the Department of General Surgery. These rotations were divided into six eight-week blocks. Their performance is shown in Table 1. We found a statistically significant difference between resident and attending physician evaluations (p=0.02). There was no significant difference between resident evaluations and performance on the shelf exam (p=0.4), whereas there was a significant difference between attending physician evaluations and the shelf exam (p=0.05). On linear regression analysis, while controlling for other variables, attending assessments remained significantly higher than both resident evaluations and performance on the shelf exam. We also noted that overall scores increased significantly (p=0.002) as the academic year progressed.

Conclusions: Resident non-clinical roles often include the evaluation and education of medical students. Here, we demonstrated that resident assessments of medical students more closely reflect performance on standardized exams than attending assessments do. Further studies will be needed to elucidate the cause of this correlation; however, based on our data, we believe that residents should continue to play an important role in the evaluation of medical students, even as non-faculty educators.