PS2 - 03: MIDTERM OSCE PREDICTS STUDENT PERFORMANCE ON NBME SHELF EXAMINATION AND CLINICAL PERFORMANCE

David D Odell, MD, MMSc, Jonathan Fryer, Trevor Barnum, Jason Burke, Amy Halverson; Northwestern University

Purpose: Accurately evaluating medical student skills during the surgical clerkship can be difficult and has traditionally relied on standardized tools such as the National Board of Medical Examiners (NBME) Shelf examination. However, test metrics do not necessarily capture all aspects of surgical patient care. Objective structured clinical examinations (OSCEs) are assessment tools designed to evaluate interpersonal skills and the process of care in addition to fund of knowledge. We sought to determine whether OSCE scores could predict the clinical performance of medical students in a general surgery clerkship.

Methods: We developed an OSCE scenario presenting a 33-year-old man with acute appendicitis. The scenario was given to students at the midpoint of the 8-week third-year surgical clerkship (midterm OSCE) as a formative exercise. Students were asked to perform a focused history and physical examination on a standardized patient and to discuss their differential diagnosis and management plan. Performance was then assessed and formally scored by both the standardized patient and independent observers. We compared midterm OSCE scores with end-of-rotation performance on the NBME Shelf examination, scores on the formal end-of-rotation OSCE, and the clinical performance evaluations each student received at the completion of the rotation. Correlation with the clinical performance evaluation served as the primary endpoint of the study.

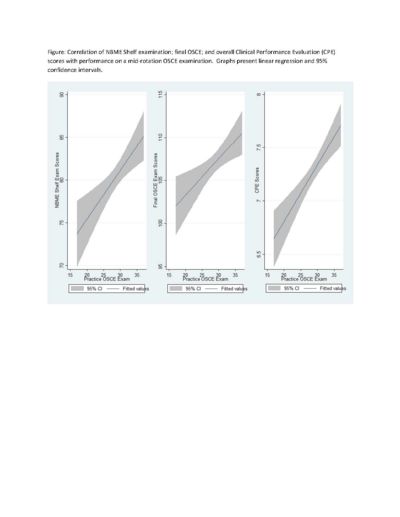

Results: The midterm OSCE was given to 149 students during the 12-month study period. The mean score was 28.7 of a possible 40 points (range 17-37). Midterm OSCE performance correlated with end-of-rotation performance on the NBME Shelf examination (p=0.0015) and on the final OSCE (p=0.0056). High midterm OSCE scores most strongly predicted strong clinical performance evaluations (p<0.0001). Midterm written examination scores were less predictive of clinical performance, and this association did not reach statistical significance (p=0.0629).

Conclusions: Midterm OSCEs predict student performance in both didactic examinations and clinical care settings and may be more accurate than written examinations for this purpose. A midterm OSCE may be more valuable than standardized testing for identifying at-risk students.